Developing reliable searches dealing with events i...

Hello everyone,

I am concerned about single-event-match (e.g. observable-based) searches and the possible indexing delay those events may have.

Would using an accelerated data model (DM) let me simply ignore a situation like the one below, while still ensuring that such an event is taken into account anyway? If so, how?

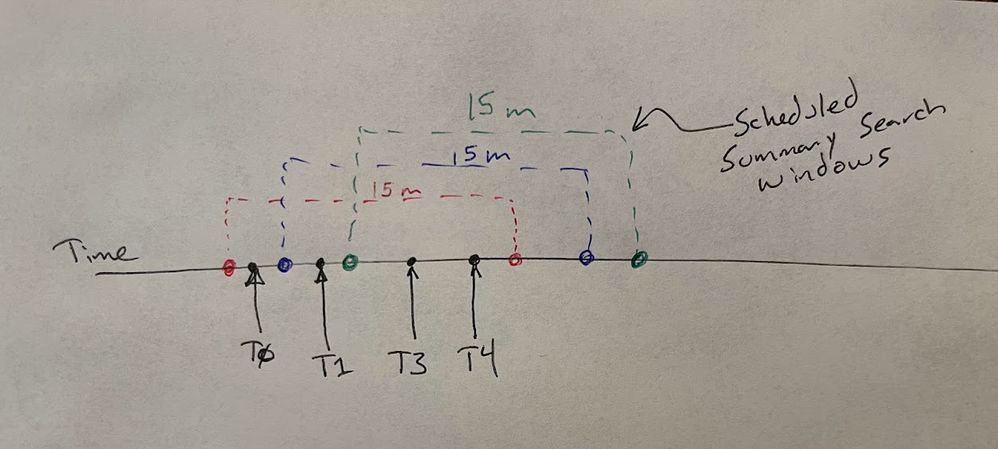

I read that Data Models "faithfully deal with late-arriving events with no upkeep or mitigation required", but I am still concerned about what would happen in a case such as the one depicted in the image above, where:

- T0 is the moment when the event happened / was logged (_time)

- T1 is the first moment taken into account by the search (earliest)

- T2 is the moment when the event was indexed (_indextime)

- T3 is the last moment taken into account by the search (latest)
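To make the concern concrete, here is a minimal Python model of the diagram (not SPL). It assumes integer epoch seconds, that a scheduled search only sees events already indexed when it runs, and that it keeps events with earliest <= _time < latest. The `Event` type, the `time_window_search` function and the values chosen for T0..T3 are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    time: int       # _time (when the event happened), epoch seconds
    indextime: int  # _indextime (when Splunk indexed it), epoch seconds


def time_window_search(events, earliest, latest, run_at):
    """Hypothetical model of a search bounded by _time (earliest/latest).

    Only events already indexed when the search runs are visible, and
    they must have earliest <= _time < latest.
    """
    return [e for e in events
            if e.indextime <= run_at and earliest <= e.time < latest]


# T0 < T1 < T2 < T3, as in the diagram (arbitrary example values).
T0, T1, T2, T3 = 0, 600, 900, 1500
late = Event(time=T0, indextime=T2)

# This run can see the event in the index, but its _time is before earliest.
current_run = time_window_search([late], earliest=T1, latest=T3, run_at=T3)
# The previous run covered T0 by _time, but executed before the event was indexed.
previous_run = time_window_search([late], earliest=T1 - 900, latest=T1, run_at=T1)
print(current_run, previous_run)
```

Both lists come back empty: the run that can see the event starts its window after T0, and the run whose window covered T0 executed before T2.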

What about instead using a "larger" time frame for earliest / latest and then focusing on the events indexed between _index_earliest / _index_latest? Would this ensure that every single event is taken into account by such a search? Splunk suggests "When using index-time based modifiers such as _index_earliest and _index_latest, [...] you must run ...". Although I'm not entirely sure about the performance impact of doing so while still filtering by _indextime, I think it would still be a good idea to allow for a maximum event lag that is large but not too large (e.g. 24h), similar to the one mentioned here: https://docs.splunk.com/Documentation/Splunk/9.1.1/Report/Durablesearch#Set_time_lag_for_late-arrivi... . Exceeding that lag could then trigger an alert of its own.
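A minimal Python sketch of this idea, under stated assumptions: runs happen exactly every INTERVAL seconds and are never skipped, each run's index-time window [r - INTERVAL, r) starts where the previous one ended, _time is never later than _indextime, and the _time bound is widened by a hypothetical MAX_LAG of 24h. `runs_matching` is an illustrative name, not a Splunk API.

```python
from dataclasses import dataclass

INTERVAL = 15 * 60       # assumed schedule: every 15 minutes
MAX_LAG = 24 * 60 * 60   # assumed maximum tolerated indexing lag: 24h


@dataclass(frozen=True)
class Event:
    time: int       # _time, epoch seconds (assumed <= indextime)
    indextime: int  # _indextime, epoch seconds


def runs_matching(event, run_times, interval=INTERVAL, max_lag=MAX_LAG):
    """Return the runs that would match `event`.

    Each run r filters on index time with [r - interval, r)
    (_index_earliest/_index_latest) and on _time with the wider
    [r - interval - max_lag, r) (earliest/latest).
    """
    hits = []
    for r in run_times:
        in_index_window = r - interval <= event.indextime < r
        in_time_window = r - interval - max_lag <= event.time < r
        if in_index_window and in_time_window:
            hits.append(r)
    return hits


RUNS = range(INTERVAL, 200 * INTERVAL, INTERVAL)  # run times, never skipped

late = Event(time=0, indextime=20 * 60)            # indexed 20 minutes late
very_late = Event(time=0, indextime=25 * 60 * 60)  # indexed 25 hours late

print(runs_matching(late, RUNS))       # matched by exactly one run
print(runs_matching(very_late, RUNS))  # lag too large: matched by no run
```

Because consecutive index-time windows neither overlap nor leave gaps, in this model an event whose lag is at most MAX_LAG is matched by exactly one run, while an event lagging by more than MAX_LAG + INTERVAL is never matched. That blind spot is what a separate lag alert would cover.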

Are there different, simpler ways to achieve such mathematical certainty, regardless of the indexing delay? (Assuming, of course, that the search isn't skipped.)

Thank you all

P.S. I asked the same question in the generic forum: https://community.splunk.com/t5/Splunk-Enterprise-Security/Developing-reliable-searches-dealing-with...

You're definitely on the right track, and data models are one way to handle this. There are other considerations with data models, but here are a few posts diving into your question:

Managing Late Arriving Data in Summary Indexing - Splunk Community

Summary Indexing vs. Data Model Acceleration - whi... - Splunk Community

When I have handled this with summary indexing, I've used a rolling query window, sort of like you described.

Assumptions:

- Each transaction had multiple log events during processing

- Each event (e.g. T0, T1, etc.) was easy to distinguish and link, because the events shared a common field, like a transaction identifier

- Each event (e.g. T0, T1, etc.) had a particular state logged with it, like START, PARSE, STORE, END.

- Under normal circumstances these transactions should finish within a minute, but we decided the worst-case scenario was 10 minutes to complete

Summary Indexing Search

- Schedule the summary indexing report to run every 5 minutes, with a 15-minute lookback (e.g. earliest=-15m@m latest=@m)

- Only summarize transactions that started within the first 5 minutes of that 15m window (thus giving them 10m to finish)
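A minimal Python model of this schedule, assuming runs land exactly on 5-minute boundaries and each transaction is keyed by the _time of its START event. The `Transaction` type and `summarize` function are hypothetical illustrations, not part of the original summary search.

```python
from dataclasses import dataclass
from typing import Optional

INTERVAL = 5 * 60    # the summary search runs every 5 minutes
LOOKBACK = 15 * 60   # earliest=-15m@m latest=@m
# Transactions starting in the first INTERVAL of the window get
# LOOKBACK - INTERVAL = 10 minutes to finish.


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    start: int            # _time of the START event, epoch seconds
    end: Optional[int]    # _time of the END event, or None if not seen yet


def summarize(transactions, run_at):
    """Return {tx_id: closed} for the transactions this run is responsible for.

    A run at `run_at` searches [run_at - LOOKBACK, run_at) but only owns
    transactions whose START falls in the first INTERVAL of that window.
    """
    slot_lo = run_at - LOOKBACK
    slot_hi = slot_lo + INTERVAL
    result = {}
    for tx in transactions:
        if slot_lo <= tx.start < slot_hi:
            result[tx.tx_id] = tx.end is not None and tx.end < run_at
    return result


txs = [
    Transaction("a", start=1000, end=1060),  # finishes in one minute
    Transaction("b", start=1000, end=None),  # END never arrived (e.g. outage)
]
for run_at in (1500, 1800, 2100):
    print(run_at, summarize(txs, run_at))
```

Every START time falls into exactly one run's 5-minute slot, so each transaction is summarized once. A transaction that takes longer than 10 minutes, or whose END never arrives, is recorded as not closed, which is the limitation of this approach.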

Here's a diagram I made in _JP Paint to show how these windows overlap and where your Tx events could appear:

Now, you could still have unclosed transactions with this summary: for example, if things took longer than your 10m assumption, or if a network outage kept Splunk from indexing data from a forwarder for a couple of hours.

This can be improved upon: you can summarize everything in your window, and then re-summarize it daily/weekly. Just keep in mind that summary indexing (and even data model stuff) is essentially trying to reduce the number of events you need to search through on a regular basis.

Thanks @_JP

Summary Indexing is something we are considering for other cases; however, this one focuses on single events, not on correlating them.

Re-reading my question, I realized my concern was not very clear. Sorry for that.

Basically, I am worried that events which should be taken into account by a search (considered, computed, filtered in) end up missed because of indexing delay. Since the _time of these events matters less than the fact that they are evaluated against the SPL logic when they arrive (are indexed), I'm wondering whether using _index_earliest / _index_latest instead of earliest / latest is the best way to make sure that every single event that should be considered by the search actually is.

For example, let's say I have a file hash and my search is scheduled to run every 15 min. The goal of the search is to scan every single event in a meaningful set of logs containing that field and raise an alert if one matches the hash. Let's also say that, for some reason, Splunk has just received an event with exactly that file hash whose _time is 20 min ago:

- Would such an event produce an alert, regardless of _time, if the search relies on DM/tstats?

- Would it be better instead to use the _index_earliest / _index_latest approach?

- Is there a better and/or simpler approach?

Thank you again

In regard to your questions:

- Would such an event produce an alert, regardless of _time, if the search relies on DM/tstats?

If an event comes in late (i.e. _indextime is well after _time), you will still find it with your query, as long as the search runs after the event was indexed and its _time-based range (the time picker, or earliest/latest) still covers the event's _time.
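A minimal Python model of this point, applied to the 15-minute / 20-minute example above. It assumes runs exactly every 15 minutes, a hypothetical 60-minute lookback, and that a run only sees events already indexed; `catching_runs` is an illustrative name.

```python
INTERVAL = 15 * 60  # the hash alert runs every 15 minutes
LOOKBACK = 60 * 60  # hypothetical longer lookback: earliest=-60m


def catching_runs(event_time, index_time, run_times, lookback=LOOKBACK):
    """Return the runs that would match an event, assuming each run at r
    searches _time in [r - lookback, r) and only sees already-indexed events."""
    return [r for r in run_times
            if index_time <= r and r - lookback <= event_time < r]


RUNS = range(INTERVAL, 40 * INTERVAL, INTERVAL)

# The example from the thread: the event shows up with a _time 20 minutes old.
event_time, index_time = 7790, 8990

print(catching_runs(event_time, index_time, RUNS, lookback=INTERVAL))  # 15m lookback
print(catching_runs(event_time, index_time, RUNS))                     # 60m lookback
```

With a 15-minute lookback the event is never matched; with a 60-minute lookback three consecutive runs match it. In this model an event is always caught when its lag is at most lookback - interval, and because the windows overlap the same event can alert more than once, so some de-duplication or alert throttling is worth considering.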

- Would it be better instead to use the _index_earliest / _index_latest approach?

Not sure it is necessarily better, but I have typically tried to solve things by looking at the _time data first. If a lot of indexing lag is happening, that indicates something that needs to be addressed, and accounted for, in the overall observability architecture.

- Is there a better and/or simpler approach?

I'm not sure this is the best way, but it is one way. 🙂 Based on what I understand, I would probably split this into two separate alerts. It also sounds like this is a pretty critical situation you want to keep an eye on.

1. The alert for the file hash runs every 15m, but I would make its lookback longer than 15m. If a late event was missed by the previous run of this alert, its _indextime may be now, but its _time is still back when you missed it. So if each scheduled run looks back further, you'd still catch the "new" event that showed up late, because you're basing things off _time.

2. A second alert can watch the spread between _time and _indextime for that data/sourcetype/source/host/whatever. I've done this before in critical situations as a pre-warning that "stuff could be going bad." You could keep track of a long-term and a short-term median of the _indextime - _time difference. If you summarize this (or, since it is so simple, even just throw it in a lookup), you can include this info in the alert for #1, as in "We didn't find anything bad...but things might be heading in that direction based on index delay..."
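For the second alert, a minimal Python sketch of the short-term vs. long-term median comparison. It assumes events are (_time, _indextime) pairs in epoch seconds, that both windows are selected on _indextime, and a hypothetical rule that raises a warning when the short-term median exceeds `ratio` times the long-term one; the window sizes and the ratio of 3 are illustrative, not values from the thread.

```python
from statistics import median


def lag_report(events, now, short_window=60 * 60, long_window=7 * 24 * 60 * 60, ratio=3.0):
    """Compare short-term vs. long-term median indexing lag.

    `events` holds (_time, _indextime) pairs; both windows are selected on
    _indextime. `ratio` is a hypothetical threshold for raising a warning.
    """
    short = [it - t for t, it in events if now - short_window <= it < now]
    long_ = [it - t for t, it in events if now - long_window <= it < now]
    if not short or not long_:
        return None  # not enough data to compare
    short_med, long_med = median(short), median(long_)
    return {
        "short_median": short_med,
        "long_median": long_med,
        "warning": short_med > ratio * long_med,
    }


now = 100_000
baseline = [(it - 30, it) for it in range(now - 50_000, now - 3_600, 1_000)]  # ~30s lag
recent = [(it - 600, it) for it in range(now - 3_000, now, 1_000)]           # 10min lag
print(lag_report(baseline + recent, now))
```

With a 30-second baseline and the most recent hour at 10 minutes of lag, the report flags a warning, which is the kind of context that could be attached to the hash alert.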