Create custom fields at indextime on Heavy Forwarders

Hi all,

I have to create a custom field at index time. I followed the documentation, but something is wrong.

The value I need is part of the source field (as you can see in the REGEX).

Using a Deployment Server, I deployed an app to my Heavy Forwarders containing the following files:

- fields.conf

- props.conf

- transforms.conf

In fields.conf I inserted:

[fieldname]

INDEXED = True

In props.conf I inserted:

[default]

TRANSFORMS-abc = fieldname

In transforms.conf I inserted:

[fieldname]

REGEX = /var/log/remote/([^/]+)/.*

FORMAT = fieldname::$1

WRITE_META = true

DEST_KEY = fieldname

SOURCE_KEY = source

REPEAT_MATCH = false

LOOKAHEAD = 100

Where is the error? What did I miss?

Thank you for your help.

Ciao.

Giuseppe
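A quick way to rule out the regex itself is to replay the REGEX/FORMAT pair from the transforms.conf stanza above outside Splunk. The sketch below is a rough Python emulation under stated assumptions: Python's `re` stands in for Splunk's PCRE engine (equivalent for this simple pattern), `apply_transform` is a hypothetical helper, and the sample path is the one used later in this thread. It only checks the pattern and the `$1` substitution, not where in the pipeline the transform runs.

```python
import re
from typing import Optional


def apply_transform(regex: str, fmt: str, value: str) -> Optional[str]:
    """Hypothetical helper: roughly emulate a transforms.conf REGEX/FORMAT pair.

    Searches `value` with `regex` and replaces each $N in `fmt` with capture
    group N. Returns None when the regex does not match, i.e. no field would
    be written for that event.
    """
    match = re.search(regex, value)
    if match is None:
        return None
    return re.sub(r"\$(\d+)", lambda g: match.group(int(g.group(1))), fmt)


REGEX = r"/var/log/remote/([^/]+)/.*"
FORMAT = "fieldname::$1"

print(apply_transform(REGEX, FORMAT, "/var/log/remote/abc/def.xyx"))  # fieldname::abc
print(apply_transform(REGEX, FORMAT, "/var/log/messages"))  # None
```

If the sample prints the expected value, the problem more likely lies in where or for which events the transform is applied than in the regex.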

https://docs.splunk.com/Documentation/Splunk/latest/Admin/Transformsconf#KEYS:

SOURCE_KEY = MetaData:Source

BTW, you don't need fields.conf on the HF; you need it on the search head (SH).

Hi @PickleRick and @isoutamo ,

Thank you for your yints,

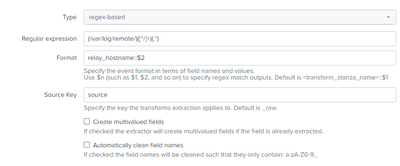

this is the new transforms.conf

[relay_hostname]

REGEX = (/var/log/remote/)([^/]+)(/.*)

FORMAT = relay_hostname::$2

WRITE_META = true

#DEST_KEY = relay_hostname

SOURCE_KEY = MetaData:Source

REPEAT_MATCH = false

I tried your hints but it still doesn't work. What else could I try?

Ciao.

Giuseppe

I didn't notice that it was in the [default] stanza. I'm not sure, but I seem to recall there was something special about it and about applying a "default transform".

Stupid question, but just to be on the safe side: you don't have any HF before this one? (So you're doing all this on the parsing component, not just receiving already-parsed data from an earlier one, right?) Oh, and remember that if you're using indexed extractions, they are parsed on the UF, so your transforms won't work on them later.

Anyway, assuming it's done at the proper spot in the path, I'd try something like this to verify that the transform runs at all:

[relay_hostname]

REGEX = .

FORMAT = relay_hostname::constantvalue

WRITE_META = true

SOURCE_KEY = MetaData:Source

REPEAT_MATCH = false

Also, with

(/var/log/remote/)([^/]+)(/.*)

you don't need to capture either the first or the last group. You only need to capture the middle part, so your regex can simply be

/var/log/remote/([^/]+)/.*
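To illustrate the point about capture groups: both patterns pick out the same value, only the group number changes ($2 versus $1 in FORMAT). A minimal Python check, using the sample path from later in this thread as an assumed input:

```python
import re

source = "/var/log/remote/abc/def.xyx"  # assumed sample source value

three_groups = re.search(r"(/var/log/remote/)([^/]+)(/.*)", source)
one_group = re.search(r"/var/log/remote/([^/]+)/.*", source)

# The host directory is group 2 in the first pattern and group 1 in the second,
# which is why FORMAT uses $2 with the former and $1 with the latter.
print(three_groups.group(2), one_group.group(1))  # abc abc
```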

Hi @PickleRick,

I also tried to associate the transformation with each sourcetype, and it doesn't work either:

In props.conf:

[cisco:ios]

TRANSFORMS-relay_hostname = relay_hostname

[cisco:ise:syslog]

TRANSFORMS-relay_hostname = relay_hostname

[f5:bigip:ltm:tcl:error]

TRANSFORMS-relay_hostname = relay_hostname

[f5:bigip:syslog]

TRANSFORMS-relay_hostname = relay_hostname

[fortigate_event]

TRANSFORMS-relay_hostname = relay_hostname

[fortigate_traffic]

TRANSFORMS-relay_hostname = relay_hostname

[infoblox:audit]

TRANSFORMS-relay_hostname = relay_hostname

[infoblox:dhcp]

TRANSFORMS-relay_hostname = relay_hostname

[infoblox:dns]

TRANSFORMS-relay_hostname = relay_hostname

[infoblox:file]

TRANSFORMS-relay_hostname = relay_hostname

[juniper:junos:firewall]

TRANSFORMS-relay_hostname = relay_hostname

[juniper:junos:switch]

TRANSFORMS-relay_hostname = relay_hostname

[pan:system]

TRANSFORMS-relay_hostname = relay_hostname

[pan:traffic]

TRANSFORMS-relay_hostname = relay_hostname

[pan:userid]

TRANSFORMS-relay_hostname = relay_hostname

[pps_log]

TRANSFORMS-relay_hostname = relay_hostname

In transforms.conf:

[relay_hostname]

REGEX = /var/log/remote/([^/]+)/.*

FORMAT = relay_hostname::$1

WRITE_META = true

SOURCE_KEY = MetaData:Source

REPEAT_MATCH = false

I also tried:

[relay_hostname]

INGEST_EVAL = relay_hostname = replace(source, "(/var/log/remote/)([^/]+)(/.*)","\2")

but both of these failed!

Thank you for your support. Do you have any other ideas about where to look for the issue?

Ciao.

Giuseppe
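For reference, eval's replace(X, Y, Z) substitutes every match of regex Y in X with Z, and returns X unchanged when nothing matches. The Python sketch below mirrors the INGEST_EVAL expression above with `re.sub`, which accepts the same `\2` backreference syntax; `splunk_replace` is a hypothetical name and "udp:514" is an invented non-matching source. Note the second case: if the expression runs for sources outside /var/log/remote (as it would from a [default] stanza), relay_hostname would receive the whole source string rather than being left empty.

```python
import re


def splunk_replace(value: str, pattern: str, replacement: str) -> str:
    """Hypothetical helper mirroring eval replace(X, Y, Z) with re.sub:
    every match of `pattern` in `value` is replaced by `replacement`."""
    return re.sub(pattern, replacement, value)


PATTERN = r"(/var/log/remote/)([^/]+)(/.*)"

print(splunk_replace("/var/log/remote/abc/def.xyx", PATTERN, r"\2"))  # abc
print(splunk_replace("udp:514", PATTERN, r"\2"))  # udp:514 (no match, unchanged)
```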

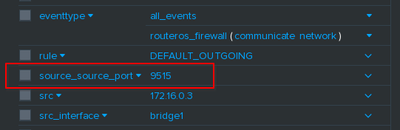

OK. Something is definitely weird with your setup, then.

I did a quick test in my home lab:

# cat props.conf

[routeros]

TRANSFORMS-add_source_based_field = add_source_based_field

# cat transforms.conf

[add_source_based_field]

REGEX = udp:(.*)

FORMAT = source_source_port::$1

WRITE_META = true

SOURCE_KEY = MetaData:Source

REPEAT_MATCH = false

As you can see, for events coming from my MikroTik router it calls a transform that adds a field called source_source_port containing the port number extracted from the source field.

And it works.

So the mechanism is sound and the configuration looks fine. Now the question is why it doesn't work for you.

One thing that can _sometimes_ be tricky here (though it's highly unlikely that it affects all your sourcetypes) is that it may not be obvious which config is effective when your sourcetype is recast during ingestion: props and transforms are applied only for the original sourcetype, even if it is changed later in the pipeline (I think we already talked about this :-)).

But as far as I can tell from your list, at least some of your sourcetypes are not recast (pps_log for sure, for example).
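One detail worth keeping in mind when reading MetaData:Source: as I read the KEYS section of the transforms.conf documentation linked earlier in the thread, the value of that key carries a `source::` prefix. An unanchored pattern such as `udp:(.*)` still matches, whereas a pattern anchored with `^/var/log/...` would never match. The Python sketch below is only an illustration of that difference; `extract_port` is a hypothetical helper and "udp:514" an assumed source value for a UDP input.

```python
import re
from typing import Optional


def extract_port(key_value: str) -> Optional[str]:
    """Hypothetical helper applying the lab REGEX `udp:(.*)` and returning group 1."""
    match = re.search(r"udp:(.*)", key_value)
    return match.group(1) if match else None


# The same source, without and with the "source::" prefix the key is documented to carry.
for value in ("udp:514", "source::udp:514"):
    print(value, "->", extract_port(value))  # both give 514

# An anchored pattern, by contrast, misses the prefixed form.
print(re.search(r"^/var/log/remote/([^/]+)/", "source::/var/log/remote/abc/def.xyx"))  # None
```

The patterns used in this thread are unanchored, so this prefix should not be the cause here; it matters only if someone later adds a `^`.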

Hi @PickleRick and @isoutamo,

I also tried to solve the issue at search time, but there are many sourcetypes that need this field, so I tried to create a field extraction associated with source=/var/log/remote/*. It still doesn't work, probably because I cannot use a wildcard in a source for field extractions.

Ciao.

Giuseppe

Hi @isoutamo,

Thank you for your hint, but with INGEST_EVAL I can only use eval functions, whereas I need a regex to extract a field from another field.

The correct approach is the first one I used, but something is wrong and I don't understand what.

Maybe the source field hasn't been extracted yet when I try to extract part of its path with a regex.

Ciao.

Giuseppe

In those cases I have taken part of the source (e.g. yyyymmmdd from the file path) and used it as a field value.

Basically, replace is one way to use a regex in Splunk.

Hi @isoutamo,

I tried your solution:

[relay_hostname]

INGEST_EVAL = relay_hostname = replace(source, "(/var/log/remote/)([^/]+)(/.*)","\2")

with no luck.

As I said, I suspect that I'm trying to extract the new field from the source field before the source field itself has been extracted!

I also tried a transformation at search time:

with the same result.

Thank you, and ciao.

Giuseppe

Based on your example data etc., this works:

| makeresults

| eval source="/var/log/remote/abc/def.xyx"

| eval relay_hostname = replace(source, "/var/log/remote/([^/]+)/.*","\1")

So it should also work in props.conf!

Are you absolutely sure that the sourcetype names in your props.conf are correct, and that there isn't any CLONE_SOURCETYPE etc. that could send events down a different path? You should also check that there are no host or source definitions that override the sourcetype definition.
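Since the makeresults test only covers one path, it can help to batch-check the same pattern against a few real `source` values before blaming the pipeline. A Python sketch of that check follows; the second sample path is invented for illustration, and `relay_hostname` here is a hypothetical helper. Note that the pattern requires a subdirectory after the host name, so a file written directly under /var/log/remote/ would not be matched.

```python
import re

PATTERN = r"/var/log/remote/([^/]+)/.*"  # same regex as the makeresults test


def relay_hostname(source: str) -> str:
    """Hypothetical helper: Python equivalent of replace(source, PATTERN, "\\1")."""
    return re.sub(PATTERN, r"\1", source)


# Invented sample paths; substitute real source values from your index.
samples = [
    "/var/log/remote/abc/def.xyx",  # host directory plus file: extracted
    "/var/log/remote/abc.log",      # file directly under remote/: unchanged
]
for s in samples:
    result = relay_hostname(s)
    status = "extracted" if result != s else "no match"
    print(f"{s} -> {result} ({status})")
```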

Hi @isoutamo,

Yes, I have some CLONE_SOURCETYPE settings, but I applied the transformation in props.conf in the default stanza:

[default]

TRANSFORMS-abc = fieldname

This should be applied to all sourcetypes.

Maybe I could try applying it to the source instead:

[source::/var/log/remote/*]

TRANSFORMS-abc = fieldname

Ciao.

Giuseppe
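Regarding the source:: stanzas (here and in the search-time attempt above): as I read the props.conf specification, a [source::...] match expression must match the whole source, and its wildcards are translated rather than used as-is: `...` matches any characters including directory separators, while `*` matches anything except a separator. If that reading is right, [source::/var/log/remote/*] would not match /var/log/remote/abc/def.xyx. The Python sketch below models that translation; `stanza_to_regex` and `stanza_matches` are hypothetical helpers, every other character is treated literally, and '/' is assumed as the separator.

```python
import re


def stanza_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a [source::...] match expression into a Python regex.

    Modeled on the props.conf rules: '...' matches any characters (including '/'),
    '*' matches anything except '/', and every other character is literal.
    """
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("...", i):
            parts.append(".*")
            i += 3
        elif pattern[i] == "*":
            parts.append("[^/]*")
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


def stanza_matches(pattern: str, source: str) -> bool:
    # The whole source must match, not just a substring.
    return stanza_to_regex(pattern).fullmatch(source) is not None


source = "/var/log/remote/abc/def.xyx"  # sample path used earlier in the thread
print(stanza_matches("/var/log/remote/*", source))    # False
print(stanza_matches("/var/log/*", source))           # False
print(stanza_matches("/var/log/remote/...", source))  # True
```

Under this reading, something like [source::/var/log/remote/...] would be the form to try, although it would not by itself explain why the [default] stanza failed too.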

At least in the past I've had some issues using [default]. In the end I had to move those settings into the actual sourcetype definitions; otherwise they didn't take effect the way I was hoping.

CLONE_SOURCETYPE also has some caveats when you want to manipulate the cloned data. I think @PickleRick had a case last autumn where we tried to solve the same kind of situation?

Hi @isoutamo,

using the [default] stanza is a must, because I have many sourcetypes and I'd like to avoid writing a stanza for each of them.

For the same reason I also tried [source::/var/log/*], but it didn't work!

Anyway, there isn't any other HF before these, because this is an rsyslog server that receives syslog.

Thank you. Is there any other check I could try?

Now I'm trying a fixed string to understand whether the issue is in the regex or in the [default] stanza.

Ciao.

Giuseppe
